During my recent renovation, I added two cameras to my new space, one at each of the two points of ingress. This was something of a departure, as these were the first Power over Ethernet (POE) cameras I’ve had installed; I had someone on-site who could run the cables cleanly.

I’ve tried a variety of camera ecosystems, both for myself and for others. Many of them push you toward subscription-based cloud services, where features like video history, motion detection, and notifications only work fully if you pay monthly. Some barely provide any features without paying, despite the fact that you bought the device. Even when local storage is offered, it is often a microSD card in the camera, which is clunky, slow, and unreliable.

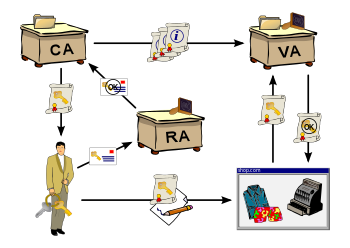

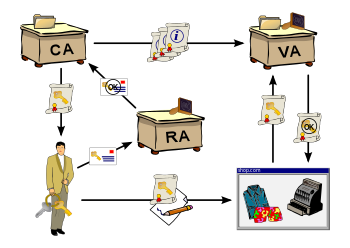

That is why I decided to go with a network video recorder (NVR): a server that takes the feeds from all the cameras and stores the recordings. You can buy commercial NVRs to install in your house, including some that integrate with the specific cameras you bought, but I wanted a solution that aligned with my philosophy of self-hosted, privacy-first smart home tech.

So I chose Frigate.

Why Frigate?

Frigate is an open-source NVR designed for real-time object detection, all running on local hardware. It is deeply customizable and can be tuned to record only what matters to you (people, cars, or animals), depending on the zones and filters you set up.

For example, one of my outdoor cameras flagged every pedestrian across the street, which is well outside the area I am concerned about. I can narrow the zone to only my property to dramatically reduce noise in footage and alerts.
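To make the zone idea concrete, here is a minimal Python sketch of how a detection could be tested against a zone polygon. It is an illustration, not Frigate's code: the zone coordinates, the `FRONT_YARD` name, and both function names are made up for this example, and using the bottom center of the bounding box as the reference point is my understanding of how Frigate evaluates zone presence.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: flip 'inside' each time a ray to the right crosses an edge."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            # x-coordinate where this edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def in_zone(box: Tuple[float, float, float, float], zone: List[Point]) -> bool:
    """box is (x_min, y_min, x_max, y_max) in pixels; image y grows downward."""
    x_min, _y_min, x_max, y_max = box
    # Assumption: the object's "feet" (bottom center of the box) decide the zone.
    bottom_center = ((x_min + x_max) / 2, y_max)
    return point_in_polygon(bottom_center, zone)


# Hypothetical zone covering the lower part of a 1280x720 frame (my property).
FRONT_YARD = [(0, 400), (1280, 400), (1280, 720), (0, 720)]

print(in_zone((600, 450, 680, 650), FRONT_YARD))  # person on the property: True
print(in_zone((900, 150, 940, 260), FRONT_YARD))  # pedestrian across the street: False
```

In Frigate itself, zones are defined in the configuration or drawn in the web UI rather than coded by hand; the sketch only shows why shrinking the polygon drops the far-away detections.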

Frigate recently added:

- Facial recognition

- License plate recognition

- View-only user roles for shared access

Everything is processed locally, with no cloud dependency.

Frigate+: Smarter Detection, Optional Subscription

To improve detection, you can also subscribe to Frigate+, a $50/year subscription that offers better-trained detection models. The models are trained with help from other Frigate users, and you can participate by voluntarily submitting false positives and other information. If you cancel, you keep the models you have downloaded; you just stop getting updates.

This helps support the developers and doesn’t lock you into a traditional subscription model.

Frigate Notifications

One gap in the core Frigate setup is the lack of robust, built-in, multi-platform notifications. That’s where another piece of software, Frigate-Notify, comes in. It offers all of the notification options I might want:

- Rich notifications

- Cross-platform delivery including mobile, desktop, and messaging apps

- Fully customizable
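For a sense of what a notification tool has to do under the hood, here is a rough Python sketch of filtering a Frigate event before alerting. It is not Frigate-Notify's code. It assumes the event arrives as JSON with `type`, `before`, and `after` fields, which is how I understand Frigate publishes events over MQTT; the watched labels, the zone name, the sample payload, and the `build_alert` function are all hypothetical.

```python
import json
from typing import Optional

WATCHED_LABELS = {"person", "car"}  # assumption: the object types I care about
WATCHED_ZONES = {"front_yard"}      # hypothetical zone name


def build_alert(payload: str) -> Optional[str]:
    """Return alert text when a watched object first enters a watched zone."""
    event = json.loads(payload)
    event_type = event.get("type")
    if event_type == "end":
        return None  # the object is gone; nothing new to report

    before = event.get("before") or {}
    after = event.get("after") or {}
    if after.get("label") not in WATCHED_LABELS:
        return None

    # For a brand-new event, treat the object as having been in no zone before.
    before_zones = set() if event_type == "new" else set(before.get("current_zones") or [])
    after_zones = set(after.get("current_zones") or [])
    newly_entered = (after_zones - before_zones) & WATCHED_ZONES
    if not newly_entered:
        return None

    zones = ", ".join(sorted(newly_entered))
    camera = after.get("camera", "unknown camera")
    return f"{after['label']} entered {zones} on {camera}"


# Trimmed, made-up payload for illustration only.
sample = json.dumps({
    "type": "update",
    "before": {"camera": "front_door", "label": "person", "current_zones": []},
    "after": {"camera": "front_door", "label": "person", "current_zones": ["front_yard"]},
})
print(build_alert(sample))  # person entered front_yard on front_door
```

Alerting only on the transition into a zone, rather than on every update, is one simple way to avoid a flood of repeat notifications for the same object.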

Next Steps For My Frigate NVR

Inspired by how well the new system is performing, I plan to replace more of my older Wi-Fi cameras with wired POE models. Wired cameras streaming directly to my NVR reduce lag, improve reliability, and give me full control over recording, storage, and alerts, all without the cloud.

If you’re tired of cloud lock-in and unreliable Wi-Fi cams, and you want a privacy-respecting, smarter surveillance system, Frigate + POE may be the combo you’ve been looking for.

In a continuing effort to get the best combination of services and pricing, I often review my choice of provider. While it is a pain to migrate services, things do change over time.
