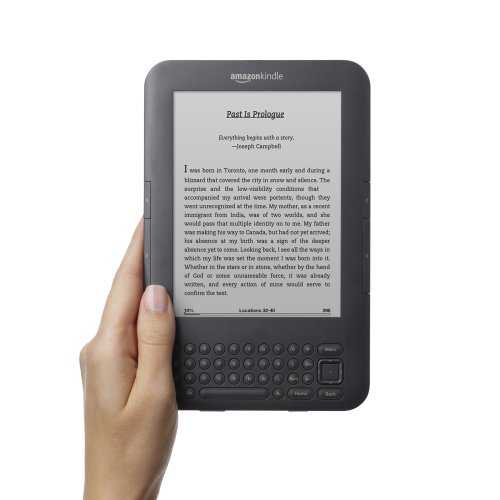

The truth of the matter is…Amazon has been slowly reducing the price of the Kindle because its interest lies in selling content, not in selling the device itself.

Personally, we’re skeptical about a free Kindle…except in bundling deals where the device is part of a larger purchase. We think the base device will likely settle somewhere between $30 and $50, making it a basically disposable purchase.

Amazon is feeling more downward pressure because more affordable tablets are coming into the mainstream. Some people use these devices instead of the Kindle; many use them in addition to the Kindle. The e-ink Kindle offers incredible battery life, simplicity, and distraction-free reading. There will always be a place for it, at a price point that is as close to free as Amazon can realistically achieve.

Either way, the Kindle Fire and the Kindle Touch are both now sold out, and Amazon has an event scheduled for next week, where we will reportedly see new Kindles.

What do you think?

